
Comments


My Nvidia MX330 (2GB) error: RuntimeError: CUDA out of memory. Tried to allocate 2.00 MiB (GPU 0; 2.00 GiB total capacity; 1.67 GiB already allocated; 0 bytes free; 1.74 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Any solutions?

Edit: nvm, I know what's wrong now. The software will not allow rendering images at a resolution bigger than 512x512; as soon as you try anything more HD, it gives the error. So in conclusion, this is just a dummy testing tool. Not worth the time downloading.
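For anyone hitting this on a 2 GB card: the message points at PYTORCH_CUDA_ALLOC_CONF, an environment variable that PyTorch's CUDA caching allocator reads before it starts allocating. The `max_split_size_mb` option only helps when reserved memory is much larger than allocated memory (fragmentation). In the MX330 message above the two are 1.74 GiB vs 1.67 GiB, so it probably won't rescue a 2 GB card. A minimal Python sketch of passing the setting to the packaged GUI, assuming you launch it from a script; the install folder in `GUI_EXE` is a hypothetical placeholder:

```python
import os
import subprocess

# Hypothetical install location; point this at wherever you unpacked the GUI.
GUI_EXE = r"C:\StableDiffusionGui\Stable Diffusion GRisk GUI.exe"

def env_with_alloc_conf(max_split_size_mb: int = 128) -> dict:
    """Return a copy of the current environment with PyTorch's
    max_split_size_mb allocator option set. It has to be in place before
    PyTorch starts using the GPU, so it is set on the child process."""
    env = dict(os.environ)  # copy, so this script's own environment is untouched
    env["PYTORCH_CUDA_ALLOC_CONF"] = f"max_split_size_mb:{max_split_size_mb}"
    return env

def launch_gui(max_split_size_mb: int = 128) -> subprocess.Popen:
    # Start the GUI as a child process that inherits the modified environment.
    return subprocess.Popen([GUI_EXE], env=env_with_alloc_conf(max_split_size_mb))
```

Without Python, the same effect should come from running `set PYTORCH_CUDA_ALLOC_CONF=max_split_size_mb:128` in a Command Prompt and starting the .exe from that same window. The bundled PyTorch evidently knows the option, since its own error message suggests it.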

Getting the same error. My Nvidia RTX 2060 GDDR6 6GB. Bummer, Nvidia only.

GRisk, works great for me, looking forward to any updates you release. Thanks for creating this.

Very nice, though I wouldn't recommend it: if you make an image smaller than 512 by 512, it will look ugly and not very detailed.

It'd be nice not to get the "Forbidden Error" when trying to download this.... riiiiight?

Worked really well up until I got the following error. Now it occurs every time, regardless of my chosen settings. I know it's not my RAM or VRAM, as I'm running 32GB of RAM and my 3070 has 8GB of VRAM. Any known workarounds/fixes?

Error message:

Traceback (most recent call last):

File "start.py", line 363, in OnRender

File "torch\autograd\grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "diffusers\pipelines\stable_diffusion\pipeline_stable_diffusion.py", line 141, in __call__

File "torch\nn\modules\module.py", line 1130, in _call_impl

return forward_call(*input, **kwargs)

File "diffusers\models\unet_2d_condition.py", line 150, in forward

File "torch\nn\modules\module.py", line 1130, in _call_impl

return forward_call(*input, **kwargs)

File "diffusers\models\unet_blocks.py", line 505, in forward

File "torch\nn\modules\module.py", line 1130, in _call_impl

return forward_call(*input, **kwargs)

File "diffusers\models\attention.py", line 168, in forward

File "torch\nn\modules\module.py", line 1130, in _call_impl

return forward_call(*input, **kwargs)

File "diffusers\models\attention.py", line 196, in forward

File "torch\nn\modules\module.py", line 1130, in _call_impl

return forward_call(*input, **kwargs)

File "diffusers\models\attention.py", line 254, in forward

RuntimeError: CUDA out of memory. Tried to allocate 2.25 GiB (GPU 0; 8.00 GiB total capacity; 4.40 GiB already allocated; 0 bytes free; 6.60 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
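The numbers in that message can be read off mechanically. A small Python sketch that pulls out the sizes; the regex assumes the exact wording PyTorch prints here, and other PyTorch versions may phrase it differently:

```python
import re

# One size in the message, e.g. "2.25 GiB", "44.00 MiB" or "0 bytes".
_SIZE = r"([\d.]+) (bytes|KiB|MiB|GiB)"
_TO_GIB = {"bytes": 1 / 1024**3, "KiB": 1 / 1024**2, "MiB": 1 / 1024, "GiB": 1.0}

def parse_oom(message: str) -> dict:
    """Extract the sizes (converted to GiB) from a PyTorch 'CUDA out of memory' message."""
    fields = {
        "requested": rf"Tried to allocate {_SIZE}",
        "total": rf"{_SIZE} total capacity",
        "allocated": rf"{_SIZE} already allocated",
        "free": rf"{_SIZE} free",
        "reserved": rf"{_SIZE} reserved in total by PyTorch",
    }
    out = {}
    for name, pattern in fields.items():
        match = re.search(pattern, message)
        if match:
            out[name] = float(match.group(1)) * _TO_GIB[match.group(2)]
    # Memory PyTorch has cached but is not currently using for tensors.
    if "reserved" in out and "allocated" in out:
        out["reserved_unused"] = out["reserved"] - out["allocated"]
    return out
```

For the 3070 message this gives 2.25 GiB requested, 4.40 GiB allocated, 6.60 GiB reserved (so about 2.2 GiB cached but unused) and 0 bytes free. The request is larger than that whole cached pool and nothing else is free on the device, so it fails even though only 4.4 GiB is held by tensors. The remaining ~1.4 GiB of the 8 GiB is presumably used outside PyTorch (CUDA context, display, other programs).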

Oddly, as soon as I posted here it began working again, no settings changed. Neat feature!

it seems like this thing is very unstable for the most part.

I have some questions:

1. Is this safe for my device? Some comments say their GPU died after using this because their GPU was overclocked. I have a Zotac RTX 3060 12GB OC Edition; it's overclocked out of the box, so I'm not sure if that's the same as a manual OC.

2. is this uncensored?

3. how long does it take to generate an image?

2. It's uncensored

3. I have an RTX 3070 8GB and it takes 15s to create a 50-iteration image.

Out of curiosity, how long do you think this would take on Intel's integrated graphics?

Can't give an exact estimate, but it'll take much longer. Just download the package and try it. But you need at least 8GB VRAM to get 512x512 px images; anything smaller than that is pretty small and useless.

Ty so much for this, I have been looking everywhere for an easy-to-install GUI for SD that actually works with GTX 16xx cards.

My pleasure. Happy prompting!

Hi there, can you post this again but without changing the font size? It really pollutes the comments.

Question: can this AI make 1080p or 4K images?

(Going to be getting a RTX 3090 FTW in the future)

Getting errors every time I open the program:

torchvision\io\image.py:13: UserWarning: Failed to load image Python extension:

torch\_jit_internal.py:751: UserWarning: Unable to retrieve source for @torch.jit._overload function: <function _DenseLayer.forward at 0x00000292B4667D30>.

warnings.warn(f"Unable to retrieve source for @torch.jit._overload function: {func}.")

torch\_jit_internal.py:751: UserWarning: Unable to retrieve source for @torch.jit._overload function: <function _DenseLayer.forward at 0x00000292B468F0D0>.

warnings.warn(f"Unable to retrieve source for @torch.jit._overload function: {func}.")

The program still works as expected

I recently downloaded Stable Diffusion for PC and got a black image. I was advised to replace my graphics card.

After replacing my NVIDIA GeForce GTX 1650 with a Gigabyte GeForce RTX 3060 Ti Eagle OC 8G, I tried to run Stable Diffusion on my PC but got the following error:

torchvision\io\image.py:13: UserWarning: Failed to load image Python extension:

torch\_jit_internal.py:751: UserWarning: Unable to retrieve source for @torch.jit._overload function: <function _DenseLayer.forward at 0x0000019888E120D0>.

warnings.warn(f"Unable to retrieve source for @torch.jit._overload function: {func}.")

torch\_jit_internal.py:751: UserWarning: Unable to retrieve source for @torch.jit._overload function: <function _DenseLayer.forward at 0x0000019888E12310>.

warnings.warn(f"Unable to retrieve source for @torch.jit._overload function: {func}.")

Render Start

Traceback (most recent call last):

File "start.py", line 316, in OnRender

File "start.py", line 207, in SavePrefab

PermissionError: [Errno 13] Permission denied: 'config_user.json'

Can anyone provide any advice?

Many thanks in advance!

The overclocking could be the main reason why you're just getting black images.

When I set my GPU's clock back to stock, it worked normally.

I don't get black images anymore. All I get is the list of errors in my earlier message.

Any ideas how to solve this problem? Is there a user manual for Stable Diffusion?

Sounds like the tool is not allowed to create the config file. Did you put it into "Program Files (x86)"? Try moving it to a temporary folder on your hard drive and see if it still happens.
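If you control the code, the usual fix for a PermissionError like the `config_user.json` one above is to fall back to a user-writable folder. A Python sketch of that pattern; it is not the GUI's actual save routine, and `save_config` plus the fallback folder are hypothetical:

```python
import json
from pathlib import Path

def save_config(settings: dict, candidate_dirs: list, filename: str = "config_user.json") -> Path:
    """Write settings as JSON to the first directory that accepts the write.

    PermissionError ([Errno 13]) is a subclass of OSError, so catching OSError
    also covers folders that are missing or otherwise unusable."""
    last_error = None
    for directory in candidate_dirs:
        target = Path(directory) / filename
        try:
            with open(target, "w", encoding="utf-8") as fh:
                json.dump(settings, fh, indent=2)
            return target
        except OSError as exc:
            last_error = exc  # not writable here; try the next location
    raise last_error if last_error else OSError("no candidate directories given")
```

For example, call `save_config(settings, [app_dir, Path.home() / "GRiskGUI"])` after creating the second folder with `mkdir(parents=True, exist_ok=True)`. For users of the packaged build, moving the whole folder out of Program Files achieves the same thing.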

The worst SD GUI I have tested so far! A lot of errors and bad performance! Absolutely not optimized at all!

Lacking a lot of features like RealESRGAN, GFPGAN, selectable GPU device, txt2img and img2img samplers, on-the-fly model switching, image viewer, masking, custom concepts, img2img, img2prompt, and so on... Absolute waste of time and disk space!!!

Which one do you recommend?

Yeah, 0.1 is pretty awful, but the paid version is currently on 0.41 and it's practically magical in terms of performance and speed, and includes a good chunk of the stuff you've listed.

Definitely has the lowest VRAM usage out of all the AI GUIs too I think, especially since they added the low VRAM usage toggle. I'm pretty sure some people are rendering with 3GB VRAM and making pretty solid images at a decent speed, too.

I'd really like to know when/if GRisk ever plans to update the free version, or if it's just doomed to stay as some janky unfinished load of WIP to serve as a "demo" for the paid version.

Where can I pay for it? Does this work on Mac as well?

(How much is it btw?)

No idea about Mac, but https://www.patreon.com/DAINAPP/posts

torchvision\io\image.py:13: UserWarning: Failed to load image Python extension:

torch\_jit_internal.py:751: UserWarning: Unable to retrieve source for @torch.jit._overload function: <function _DenseLayer.forward at 0x000001C0E1363820>.

warnings.warn(f"Unable to retrieve source for @torch.jit._overload function: {func}.")

torch\_jit_internal.py:751: UserWarning: Unable to retrieve source for @torch.jit._overload function: <function _DenseLayer.forward at 0x000001C0E13638B0>.

warnings.warn(f"Unable to retrieve source for @torch.jit._overload function: {func}.")

How do I fix this?

Did you solve this? I have the same problem

I'm pretty sure it's only a warning that you can ignore

https://discuss.pytorch.org/t/issue-with-torch-git-source-in-pyinstaller/135446/...

Yes, I think it's because you can't use images as a source unless you pay $8 a month!

I love it !!!!

Can anyone get the inpainting working? I tried several ways, but it doesn't seem to work. Maybe it's only partially implemented, as I don't see a way to upload the original file and the mask.

I have the same problem. Where and how do we actually paint? :P

Every time it renders, I get a black image like this.

Does anyone know how to fix this?

What computer do you have? And what settings are you using in the GUI?

ASUS TUF FX505DT

GTX 1650 4GB VRAM

Settings are default on the gui

Kinda sad that I can't use it at the moment due to having a 1660, but if it gets patched and fixed in the future I'll be hella happy to use it.

WARNING: this software killed my GPU, a GTX 1070. I'd been using it for 6 years with no issues, used this to render ~100 images, and it's completely dead. So, uh, maybe don't run this with your card overclocked? Idk, weird.

Neural networks, in principle, cannot work with AMD due to the lack of CUDA.

No, it will work with AMD. You will have to build it with a PyTorch build based on AMD's Radeon Open Compute platform (ROCm).

Can you make a short video or Instructable for that?

It will be really useful for a noob like me.
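On the ROCm point: ROCm builds of PyTorch reuse the `torch.cuda` API, and at least at the time of this thread they were Linux-only, so the Windows GUI itself won't pick up an AMD card. If you have a PyTorch install and want to see which backend it was built for, a small Python check using `torch.version.hip` and `torch.version.cuda`:

```python
def torch_gpu_backend() -> str:
    """Describe which GPU backend the installed PyTorch build was compiled for."""
    try:
        import torch
    except ImportError:
        return "PyTorch not installed"
    hip = getattr(torch.version, "hip", None)  # version string on ROCm builds, else None
    if hip:
        backend = f"ROCm/HIP {hip}"
    elif torch.version.cuda:
        backend = f"CUDA {torch.version.cuda}"
    else:
        return "CPU-only build"
    # ROCm builds expose AMD GPUs through the same torch.cuda API.
    return f"{backend}, GPU available: {torch.cuda.is_available()}"

print(torch_gpu_backend())
```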

Got these images using prompts like - "old stone castle ruins in the rain with a river running alongside it and ivy growing on it"

Works great on my humble 1060 with 6GB

Generated the following image with user Ramonisai's prompt text

" beautiful girl longshot, red hair, flower crown, hyper realistic, pale skin, 4k, extreme detail, detailed drawing, trending artstation, hd, fantasy, d&d, realistic lighting, by alphonse mucha, greg rutkowski, sharp focus, elegant"


How long did it take to generate this picture?

1 minute 20 seconds

Can anyone with a 3090 Ti confirm their highest resolutions possible as a result? Looks like I can max out at 768 by 512 on my 2080 Ti. Any examples would be appreciated.

Thanks for confirming. Shortly after I ended up reading a ton of articles and it appears it wouldn't matter much anyways, as they were trained at 512x512 resolution to begin with, which makes sense as to limits and images tending to overlap or duplicate when you set the output higher. And you're right, 768x512 is really perfectly fine, I was just curious but knowing more now realize that there wouldn't be much benefit of a 3090 Ti over a 3080 Ti... at least, yet.
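A rough way to see why resolution hits VRAM so hard: at the UNet's highest-resolution level, self-attention compares every latent position with every other one, so that score matrix grows with the square of the pixel count. The Python sketch below estimates the size of one such matrix under stated assumptions (an SD v1-style model, 8x VAE downscale, 8 attention heads, a batch of 2 for classifier-free guidance, fp16). It ignores the weights and every other activation, so treat it as an order-of-magnitude illustration, not a VRAM requirement.

```python
def attention_scores_bytes(width: int, height: int, batch: int = 2,
                           heads: int = 8, bytes_per_elem: int = 2,
                           downscale: int = 8) -> int:
    """Estimate one full self-attention score matrix at the highest-resolution
    UNet level. All defaults are assumptions for an SD v1-style model in fp16."""
    if width % downscale or height % downscale:
        raise ValueError("width and height must be multiples of the VAE downscale factor")
    tokens = (width // downscale) * (height // downscale)  # latent positions
    # One tokens x tokens matrix per (batch item, head).
    return batch * heads * tokens * tokens * bytes_per_elem

def gib(n_bytes: int) -> float:
    return n_bytes / 1024**3

for w, h in [(512, 512), (768, 512), (768, 768), (1024, 1024)]:
    print(f"{w}x{h}: {gib(attention_scores_bytes(w, h)):.2f} GiB")
```

Under those assumptions 512x512 works out to 0.5 GiB, 768x512 to 2.25 times that, and 1024x1024 to 8 GiB for a single matrix, which is why naive implementations run out of memory quickly above 512. Front ends that compute attention in slices (for example diffusers' `enable_attention_slicing()`) avoid holding the full matrix at once, trading some speed for memory.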

I can do 1080x1080 on the updated version of the GUI using my 8GB 2070 Super, and still have enough VRAM left over to fiddle around in Blender while the GUI does its work.

It's kinda annoying that they're paywalling such big updates. I hope they plan to update the itch.io version soon, so people don't have to pay $10 a month for access to a bunch of different services they probably won't use just to get the bug fixes, content, and optimization of the newer versions of the GUI.

If this works for me: I was wondering whether these are really random generations, and if so, could I use them in my own programs?

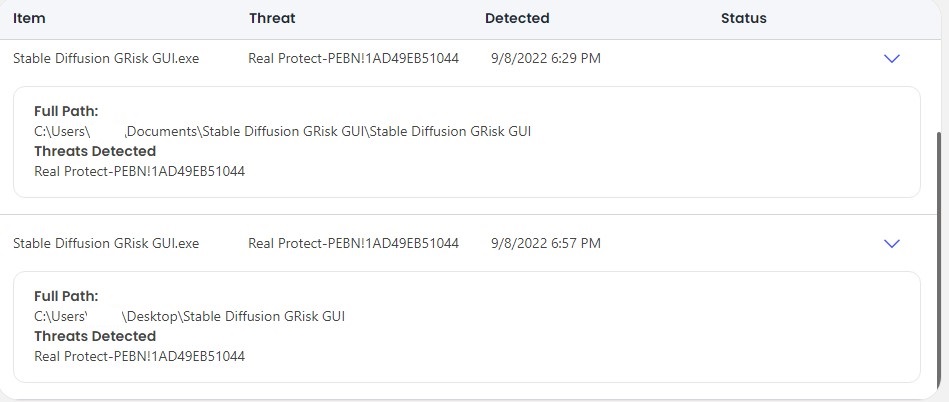

McAfee has identified "Stable Diffusion GRisk GUI.exe" as containing a virus. Is anyone else getting this?


who tf uses mcafee :skull:

I guess people that buy a new computer that comes with a subscription! XD
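On the McAfee report: a hash can't tell you whether a file is safe, but it does let you check that your download is byte-for-byte identical to someone else's copy, or look the file up on a multi-engine scanner that accepts hashes. A short Python sketch using only the standard library (PowerShell's `Get-FileHash` does the same job):

```python
import hashlib
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large downloads don't need to fit in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```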

Doesn't really work. Worked fine once, but now every time I try to render something it just keeps saying CUDA out of memory, and this persists even after a hard restart. I have a 2080 Ti with 11GB of VRAM, and the program can't even allocate 3GB to render a file. It seems the program is allocating memory that it never releases after it's done, so each subsequent load hogs more GPU memory.

I don't think the program, or the Stable Diffusion implementation, is ready for prime time yet. 512x512 is about as far as I can render, which is also a really low resolution, and even with Gigapixel the quality is subpar compared to other programs like Midjourney.

Sorry to hear it's not working for you. I've got an RTX 2070 and it works great (8GB of VRAM). Maybe Midjourney is a better option for you if you don't mind using Discord.
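Two notes on memory that is "never released". First, GPU memory held by a process is returned when that process exits, so if the error survives a full restart, the settings (resolution, samples) or another program are a more likely cause than a leak carried across runs. Second, within one Python process, PyTorch keeps freed blocks in a cache that still counts as used in tools like Task Manager or nvidia-smi. A sketch of releasing that cache, for people running SD from their own scripts (this is not a setting in the GUI):

```python
import gc

def release_cuda_cache() -> dict:
    """Collect unreferenced Python objects, then return PyTorch's cached,
    unused GPU blocks to the driver. Returns the remaining usage in GiB."""
    try:
        import torch
    except ImportError:
        return {}
    gc.collect()  # frees tensors that are only kept alive by reference cycles
    if not torch.cuda.is_available():
        return {}
    torch.cuda.empty_cache()  # releases cached blocks that no tensor is using
    return {
        "allocated_gib": torch.cuda.memory_allocated() / 1024**3,
        "reserved_gib": torch.cuda.memory_reserved() / 1024**3,
    }
```

`empty_cache()` cannot free memory that a live tensor still uses; anything still referenced (for example a previous result kept in a variable) has to be deleted first.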

Random stuff I've generated.

Some really nice Ai images... well done... Especially loving the battleships at sea... gorgeous

Strange, I also have a 2080 Ti, but I'm able to allocate pretty much all of my VRAM. I'm able to get away with 576x576 before maxing out.

I can do up to 1024 x 1024 with my 2070 (with the Patreon GUI, mind you).

Holy shit i'm buying

I love this tool so much that I decided to become a Patron! It's so useful to have a separate txt file with the settings used for each generated image (as opposed to having them in the image's file name, like DreamStudio does). I just downloaded GUI version 0.41 and was happily surprised to find out I can generate larger images, samples per prompt works, and there is an upscaler as well as inpainting!!! (although I haven't figured out how it works)

Anyway, I thought I’d share this since more people might decide to support the development of this GUI as it’s not advertised anywhere what’s in the Patreon version (or at least I didn’t know, I just thought I was giving money to say thank you!).

Are there plans to add GPEN or GFPGAN to fix faces? That would be amazing, along with the ability to save the output path!

Is there a reason why I'm getting random images generated without any input from me? I generate an image, then it runs again and outputs something else.

An example of info from one of the text files associated with the rogue image.

{'text': '', 'folder': '.\\results', 'resX': 512, 'resY': 512, 'half': 1, 'seed': 3314742242, 'origin': None, 'origin_W': None, 'steps': 100, 'vscale': 8.0, 'samples': 22}

That happens when there are empty lines in your text field. It doesn't know what to do with it, so it generates random stuff
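A sketch of that behaviour in Python, assuming the GUI treats each line of the text field as a separate prompt, as the reply suggests. These are hypothetical helpers, not the GUI's code: skipping blank lines avoids the empty-prompt runs, and since the settings file is a Python dict literal, `ast.literal_eval` can read it back to spot them.

```python
import ast

def split_prompts(text_field: str) -> list:
    """One prompt per line; blank or whitespace-only lines are skipped."""
    return [line.strip() for line in text_field.splitlines() if line.strip()]

def has_empty_prompt(settings_line: str) -> bool:
    """Parse a settings line like the example above and check its 'text' entry."""
    settings = ast.literal_eval(settings_line)  # literals only; never runs code
    return not str(settings.get("text") or "").strip()
```

The example settings line above has `'text': ''`, so `has_empty_prompt` flags it, which fits the explanation that a blank line triggered the "rogue" render.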

Does the Patreon version support full precision so we can use the 1650 and 1660 cards? And which tier do we need to be on to access the downloads?

The latest Patreon version does not support the 16xx series yet, and you need the $9 tier for the downloads. You might want to wait until half precision can be turned off, though.

Thanks, I guess I'll be sticking to the command line version.

I tried it on my OptiPlex 990 (16 GB RAM, GeForce GT 710), but I get this error:

File "torch\nn\modules\module.py", line 925, in convert

return t.to(device, dtype if t.is_floating_point() or t.is_complex() else None, non_blocking)

RuntimeError: CUDA out of memory. Tried to allocate 44.00 MiB (GPU 0; 2.00 GiB total capacity; 1.57 GiB already allocated; 20.93 MiB free; 1.63 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Does anyone know if there's anything I can do to get it to run on this machine?

Either turn down the resolution or get more RAM.

Thanks for your advice. When you say turn down resolution, what do you mean? I dropped the Resolution down to 64x64 but I got the same error. Currently I have 16 GB RAM. How much RAM is the minimum requirement? Thanks again!

I think it's your VRAM, actually. Can you check how much VRAM you have?

2 GB, so I see I need 4 GB. Hm. Someone recently mentioned that I might be able to create a virtual machine and up the VRAM using it, but I'm not sure if that would work given the NVIDIA CUDA requirements. Hmm... Maybe I need to upgrade my hardware after all.
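The GT 710 traceback fails inside `t.to(device, ...)`, i.e. while the model weights are being moved to the GPU and before any image is rendered, which is why lowering the resolution to 64x64 made no difference. For scale, the SD v1 UNet alone is about 860M parameters, roughly 1.6 GiB in half precision. To check a card's VRAM from Python, a sketch that wraps `nvidia-smi`, which ships with the NVIDIA driver:

```python
import subprocess

def parse_nvidia_smi(csv_text: str) -> list:
    """Parse the output of
    nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits
    into (gpu_name, total_MiB) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        name, total_mib = (part.strip() for part in line.rsplit(",", 1))
        gpus.append((name, int(total_mib)))
    return gpus

def query_vram() -> list:
    # Requires the NVIDIA driver (nvidia-smi on PATH); raises if the call fails.
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_nvidia_smi(out)
```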

Hey GRisk, amazing tool!

I'm also running a 1660 Ti GPU, so no results for me right now.

Would it be too much to ask to add a feature to disable the half-precision mode that's at the heart of why it's not running on 1600 series GPUs?
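What the request amounts to is choosing the tensor precision per card. A Python sketch with a hypothetical heuristic; the function names and the "GTX 16xx means full precision" rule are assumptions drawn from this thread, not the GUI's logic:

```python
import re

def needs_full_precision(device_name: str) -> bool:
    """Hypothetical rule: GTX 16xx cards (1650, 1660, 1660 Ti, ...) are the ones
    reported here to produce black images in half precision."""
    return re.search(r"GTX\s*16\d\d", device_name, re.IGNORECASE) is not None

def choose_dtype_name(device_name: str) -> str:
    return "float32" if needs_full_precision(device_name) else "float16"

def current_device_dtype() -> str:
    try:
        import torch
    except ImportError:
        return "float32"
    if not torch.cuda.is_available():
        return "float32"  # CPU inference generally runs in full precision
    return choose_dtype_name(torch.cuda.get_device_name(0))
```

Full precision roughly doubles the memory the weights need, so a 4 GB GTX 1650 may then run into the out-of-memory errors discussed above instead.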

I have an NVIDIA GeForce GTX 1650 card. All I get is a black PNG. Any idea how to solve this problem?

The models sadly don't work with 16xx series cards

Thank you so much for your answer! Do you know on which cards it does work?

it works on my old 1080, and I think I saw 1070 working somewhere. Other than these you would want an RTX 20xx or RTX30xx series

All Nvidia, no AMD support

Thank you so much!

Hi, SD 1.5 is out! Is there any chance of an upgrade?

SD 1.5 is not available for download as far as I know. It's only available on DreamStudio for now.

can it work on a GTX 1070 with CUDA?

Works fine on my GTX 970 with 4GB VRAM

I'm only getting black pngs. Can anyone tell me what's going on

Do you have a GTX 16xx series card?

Mine is GTX 1650 and I also get black images

I'm in the same situation. Maybe the information at the top of this page will help you: the graphics card has to support CUDA, and the 1650 may not be included.

Is there any way to manually update the libraries from official sources so that this app uses them? As written below, a new version is out, want to try it

Stable Diffusion 1.5 version is out! Curious to find out what has been improved. Hands hopefully among other things! Can't wait to try it.

Version 1.5 is not available for download yet afaik

Yes on DreamStudio web interface but should be available to download soon I heard.

The Patreon version uses the same model as the itch.io one; just the GUI features are different.

I don't know if it's "malicious". He's done work, he wants to get compensated.

Don't get your hopes too high for v1.5.